The Coming of Local LLMs

Large language models have advanced remarkably as they continue to scale up, trained and run on ever-larger GPU clusters. Even so, there is still a need to run smaller models on devices with limited memory and processing power.

Running models at the edge makes it possible to build applications that protect user privacy, because user data never leaves the device, and that avoid the latency of a round trip to a server.

It also lets an application keep working through server outages or degraded service, and insulates it from changes to an upstream provider's policies or API.

LLaMA Changes Everything

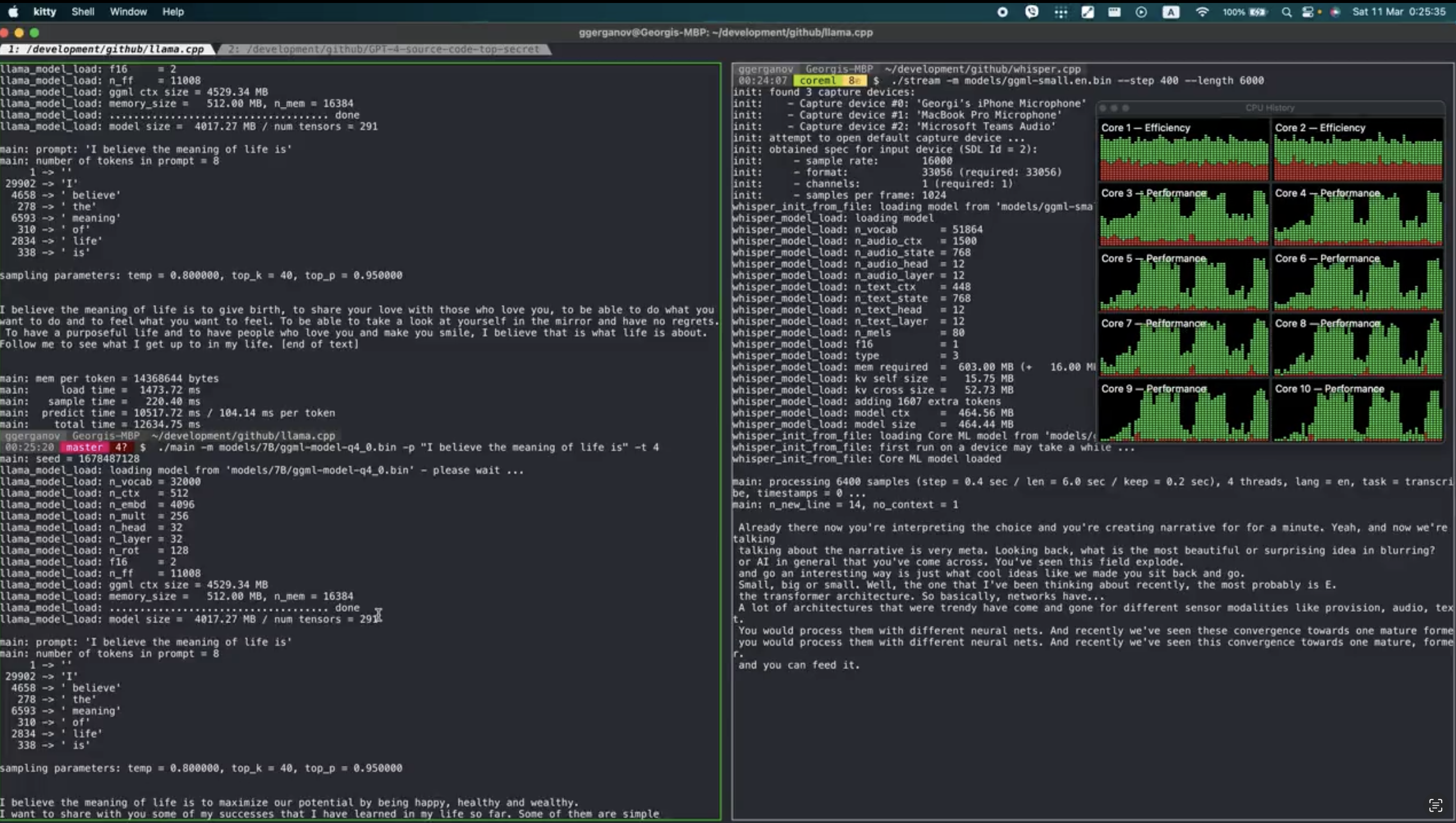

Recently, the weights of Facebook’s LLaMA model leaked via a torrent posted to 4chan. This sparked a flurry of open-source activity, including llama.cpp. Written by the creator of whisper.cpp, it quickly showed that an LLM could run on an M1 Mac.

Soon, Anish Thite posted a video of it running on a Google Pixel 6 phone. It was incredibly slow, at 1 token per second, but it was a start.

The next day, he posted a new video showing it running at 5 tokens per second.


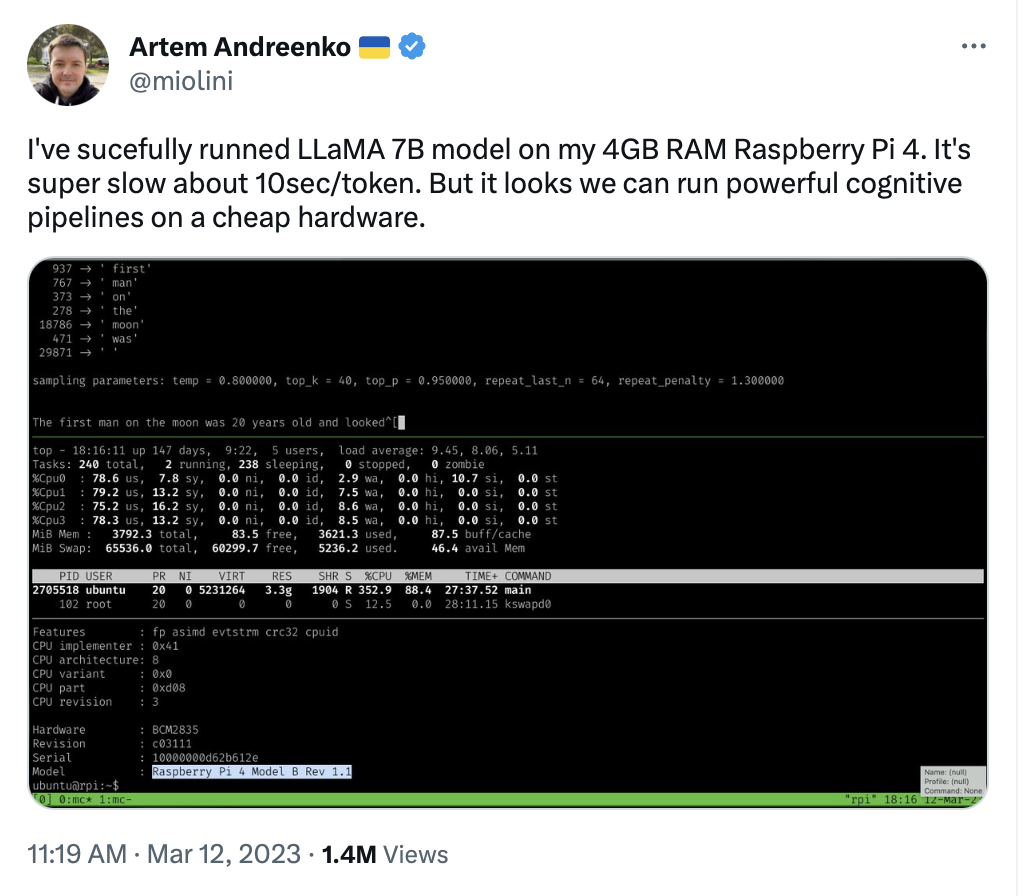

Soon after, Artem Andreenko got LLaMA running on a Raspberry Pi 4:

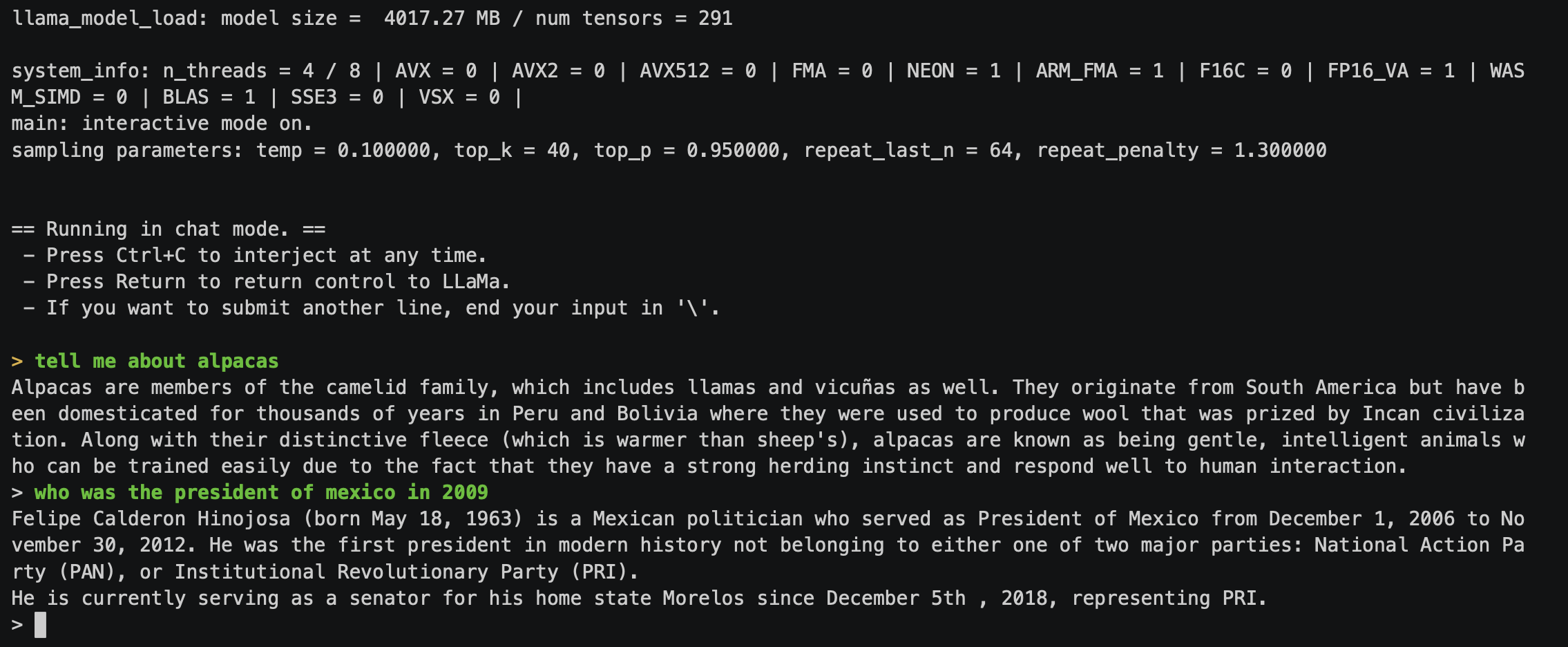

Kevin Kwok created a fork of llama.cpp that added a ChatGPT-style interface:

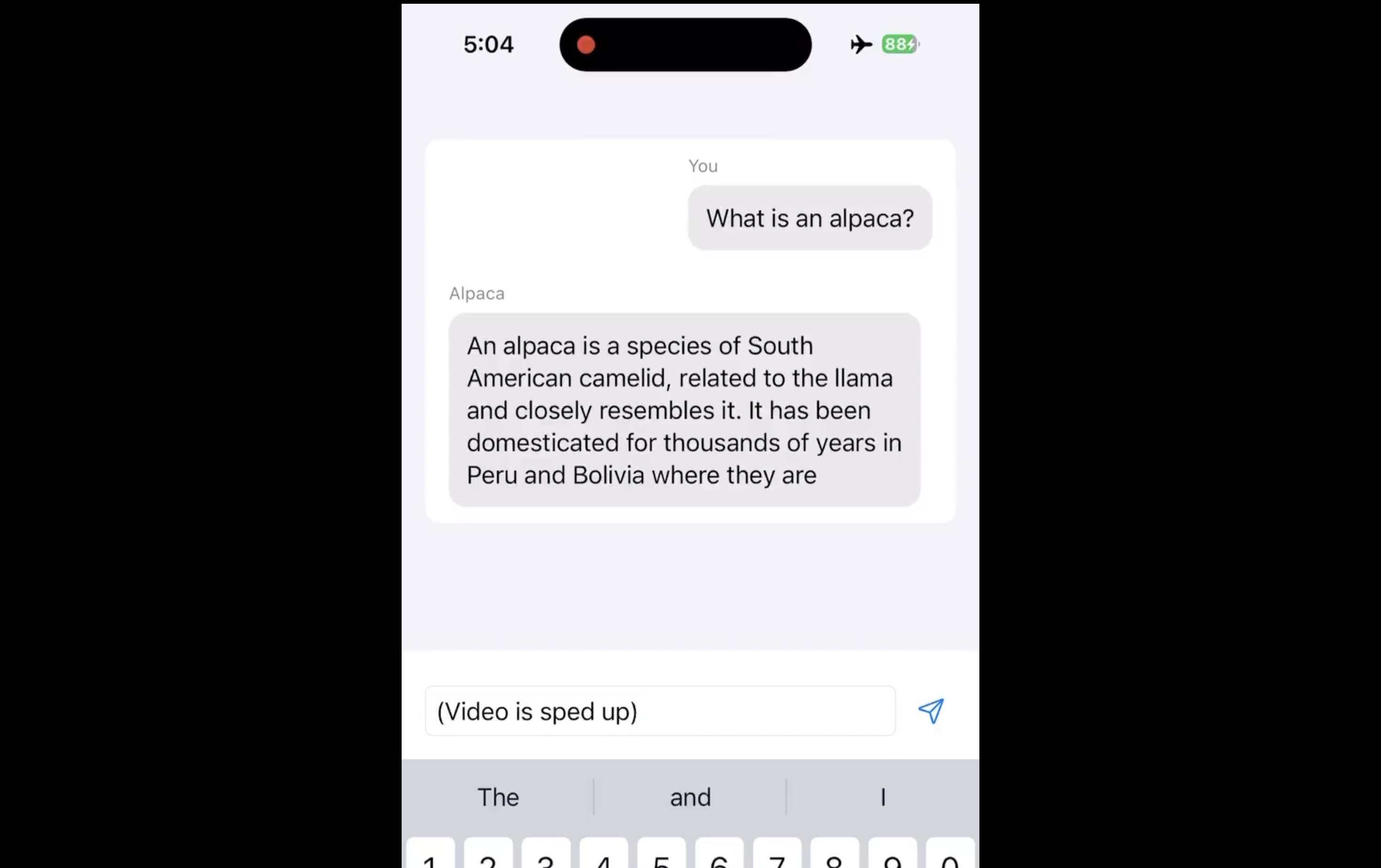

And finally, yesterday, he posted a demonstration of Alpaca running on an iPhone.

Consumer Electronics Companies and LLMs

Given the rapid progress the open source community is making on running LLMs locally, the question is bound to come up: what is Apple doing? Although Apple has significant ML capabilities and talent, its ML job listings do not suggest it is actively hiring for any LLM projects. The top LLM expertise is concentrated at OpenAI, Anthropic, and DeepMind.

Apple is generally late to bring major technological advances into its products. If and when Apple does develop on-device LLM capabilities, they could either arrive as Core ML models embedded in individual apps, in different flavors such as summarization, sentiment analysis, and text generation.

Or Apple could ship a single LLM as part of an OS update. Apps could then interact with the LLM through system frameworks, similar to how the Vision framework works.

To me, this seems the more likely of the two approaches, both because it keeps every app on a user’s phone from bundling its own large model and, more importantly, because it would let Apple build a much more capable version of Siri.

It’s highly unlikely that Apple will ever license LLMs from outside parties like OpenAI or Anthropic. Its strategy is to either build everything in-house or acquire small startups and integrate them into its products, as it did with the Siri acquisition.

One consumer electronics company that isn’t shy about partnering with OpenAI is Humane, which recently announced a $100M Series C round. The company is still in stealth, but its product is widely believed to be a laser-projector-based AR wearable. The laser projector alone would put a device like that under major power constraints, which would likely rule out running an embedded LLM, at least in the first version.

As part of the fundraising announcement, Humane disclosed that it is partnering with several companies, including OpenAI. My guess is that it will use a combination of Whisper and GPT-4 for some kind of personal assistant built into its hardware. While Whisper has been shown to run quite capably on-device, it will probably be some time before a powerful language model can do so in production.

Closing Thoughts

I think we’re eventually going to see a demo of an open source model running on an iPhone as well. I don’t have a good intuition for how long it will take before these models are baked into production apps and, eventually, into operating systems themselves. But I do expect it to happen, opening the door to deeply personal and personalized ML models that we carry in our pockets: intelligent assistants with us at all times.

If you’re doing work in the area of local LLMs, particularly ones that may be able to run on phones or other embedded devices, please reach out.